Telling the tale of two tetrations

Many people are familiar with exponential growth. We use it to model many things, including compounding interest and viral spread. To many, “exponential growth” is synonymous with “really fast growth”. Of course, there are faster growing functions, but they don’t come up in as many places. One of those faster growing functions, is tetration.

It’s rare to see any examples of tetration “in the wild”. That’s why I found it really funny when tetration showed up in not one, but two different nerd-game challenges in 2022. In this post, I’ll describe a basic algorithm that leads to tetration, and then show how it helped set two records. One of them is my own “inefficiency challenge” in Opus Magnum. The other comes from users “squareroot12621” and “Pavgran” on the Conway’s Game of Life forums, to find the best engineered “diehard” in a given starting area.

Fast Growth

Googol and friends

There’s an entire field of googology devoted to big numbers and easy ways to get to them. The namesake of googology is the googol, first coined in 1920 to refer to a 1 with a hundred zeros after it. Now, it takes about 80 digits to express the number of particles in the universe. Anything larger, and there is no longer a physical reason for it. But human fascination isn’t bound by physical reasons. And so, we made larger numbers, like a googolplex.

A googol can be written very simply in scientific notation: 10100. A googolplex is 10googol. A googolplex is so large, that it is no longer reasonable to try to write it in proper scientific notation. That would involve a 101 digit number in the exponent. Since the number in the exponent has a name, I just cheated and wrote it there instead.

You can go bigger as well, 10googolplex is certainly a valid number, I’ve seen it called a “googolplexian” or a “googolduplex”.

Smarter not harder

These are big numbers, in the sense that nobody ever will need to use them to describe the real world. But they are very small numbers once you actually build out the mathematical framework known as the “fast growing hierarchy”. Notice that we got from a hundred, to a googol, to a googolplex, to a googolplexian by just repeating the same thing over and over. The fast growing hierarchy optimizes that process of doing something over and over, continuously exhausting all other notation we might use to compare these large numbers. Stuff gets very, very big.

I won’t go into the entire fast growing hierarchy here, since tetration is definitely a very early stop. In fact, it’s the first stop past exponentiation, which is what we were just doing.

Tetration

I know that we started with 10100. But humor me for a bit, and let’s write 1010 instead. I’ll call this the “nerfed googol”. This is a more ordinary number, ten billion. It’s more than the number of people alive today, but less than the total number of people who have lived in the past hundred years. There are people with enough wealth to buy ten billion cheeseburgers. Y’know, hypothetically.

If we make our “nerfed googolplex” as 10nerfed googol, we once again have a number that nobody can ever relate to things in the universe. A ten-billion-and-one digit number. And our “nerfed googolplexian” is larger still.

I nerfed things so that we can write them exactly using tetration. Nerfed googol is 10 ↑↑ 2. Nerfed googolplex 10 ↑↑ 3, and nerfed googolplexian 10 ↑↑ 4.

Up arrows?

Tetration is a function. The inputs to the function are the base and the “height”. Written between them, signifying tetration, are two up-arrows. The arrows system was built by renowned computer scientist Donald Knuth, and goes much further than tetration, if you’re curious.

When you write a ↑↑ b, this means writing a, b times, taking exponents from right to left.

In other words, tetration is the function which raises a fixed number to the power of the previous result, on repeat. It is exactly what we did to get from googol to googolplex to googolplexian, using 10 as our base. If you wanted to find out what 10 ↑↑ 8 was, you would take 10(10 ↑↑ 7).

Reduced specificity

Why did I have to “nerf” the googol series of numbers to write them exactly? How would you write an ordinary googolplexian using tetration? Well, there isn’t a clean way. In the fast growing hierarchy, one thing is very clear. Getting to bigger numbers, means reducing how many details you keep around. The height of the exponential tower is more important than the number at the top. So while a googolplexian is definitely more than 10 ↑↑ 4, because it has a 100 at the top instead of a 10, it’s also definitely less than 100 ↑↑ 4.

If you do some tricks with logarithms, you can show that a googolplexian is between 56 ↑↑ 4 and 57 ↑↑ 4. Meanwhile, 10 ↑↑ 5 is much, much bigger. A lot of the times you will see large numbers bounded by “we know it is more than this, and less than that”. Exact answers are rare. That’s just the nature of it.

What about non-whole numbers? It turns out that on the right (the height), this isn’t well defined. There’s not one perfect function that matches tetration across the entire real number line, the way there is for exponentials, so 10 ↑↑ 4.2 might be greater than or might be less than a googolplexian. But you can write non-whole numbers on the left (the base). So you could write that a googolplexian is between 56.84 ↑↑ 4 and 56.85 ↑↑ 4, and that would be sound and correct.

An Algorithm

Ok, so tetration makes big numbers. Now let’s try to find a somewhat-tangible process that actually has tetration as its answer.

The golf addict

Let’s say you like to golf, a lot. Enough that you are happy doing it basically for the rest of time. You go out to the driving range to practice, where they have buckets that can hold as many golf balls as you ever wanted.

The golf ball dispenser machine puts a ball in the bucket every second. You leave a bucket filling while you go use up your current bucket. Each shot takes a few seconds, say 5, so when you finish your bucket and go back to get the next one, it has 5x more golf balls than the one you just finished. Great! Swap it out for a new empty bucket, bringing the new balls to the tee for more.

Exponential growth

This is exponential growth. Each time you go back and get the next bucket, it has been filling the entire time you use up the previous one. It fills faster than you use it up, so you end up with 5x the golf balls each time. You always grab a new empty bucket and set it up before leaving. This keeps going, day and night. You could do this forever.

The buckets

You could do this with just two buckets, one getting filled and one getting used up. But you choose instead to get a new bucket every time. After all, the staff left a nice stack of them next to the ball dispenser. But as you work through bucket after bucket, the stack is finally starting to get low.

It turns out, the staff has been restocking the ball machine each morning by bringing in one bucket, holding a day’s worth of golf balls. After filling the machine, they add that used bucket to a second stack, in the back room. At a rate of one bucket per day, the second stack is growing. Once you finally have used up the stack of buckets right next to the machine, the staff kindly brings out the new stack.

All of these processes keep repeating. You use up a bucket of balls, grab the next one, set up a new bucket to fill. Once per day the staff adds a bucket to their own stack. If you use up the current stack of buckets, they replace that stack with the one they have been growing.

Tetration achieved

While the number of balls in a bucket has been implementing exponential growth, the number of buckets in a stack grows much more strongly. It turns out, it grows like tetration.

Explanation

The easiest way to see this, is to consider when each event happens. We didn’t really say how many balls were in your first bucket, or how many buckets were in the first stack. These details don’t really matter (they change what the number at the top of the power tower is, and little else). So let’s just say that the first bucket had a single ball, and the stack had 20 buckets.

5 seconds later you get the second bucket. 25 seconds later, the third. 125 seconds later, the fourth.

But exponential growth is no joke. By the end of the day, you are working through your 7th bucket. By the time a year has passed, you are working through your 11th bucket. A thousand years? 15th bucket.

Pretty soon the scale of “one bucket added to the backup stack every day” is a lot more daunting. But just as you have two buckets, one filling and one being used up, there are also two stacks. One growing, and one getting used up. So it is guaranteed that you will eventually (hypothetically), use up any stack that you are given. It’s just that by the time you do, the number of buckets in the next stack, is a lot larger.

You finally use the 20th bucket after 3.8 million years. The new stack that gets brought out, has a billion buckets. Give or take.

Each bucket still taking 5 times longer than the previous, you use this stack up after around 10700000000 years.

Math

Let’s try to convince ourselves that this is actually tetration, in addition to just golf torture. The following things are all proportional: time passed, golf balls dispensed, buckets placed. This is guaranteed because we defined constant rates for golf balls (one per second) and buckets (one per day).

The nth bucket has 5n balls in it once filled. By geometric series, buckets n through k have a total of 1.25(5k – 5n-1) balls. So we can find out how many balls need to get hit to go through a stack of buckets. And using proportionality, that tells us how many buckets there will be in the next stack.

At “leading order”, where we only keep the important exponent, the number of days it takes to get through a stack ending on the kth bucket, looks like some multiple of 5k. So the next stack has around 5k buckets, which takes (again at leading order), 5that days. By mapping a value into the exponent on every iteration, we increase the size of the power tower. This is tetration. The number of buckets in the Nth stack, is around 5 ↑↑ N.

With the algorithm mostly explained, let’s find it in two concrete examples.

Opus Magnum

Back in February, I posed a challenge in Opus Magnum. Make a solution to the puzzle “Face Powder” from the first chapter, which fits completely on screen, and with the highest cycle count you can.

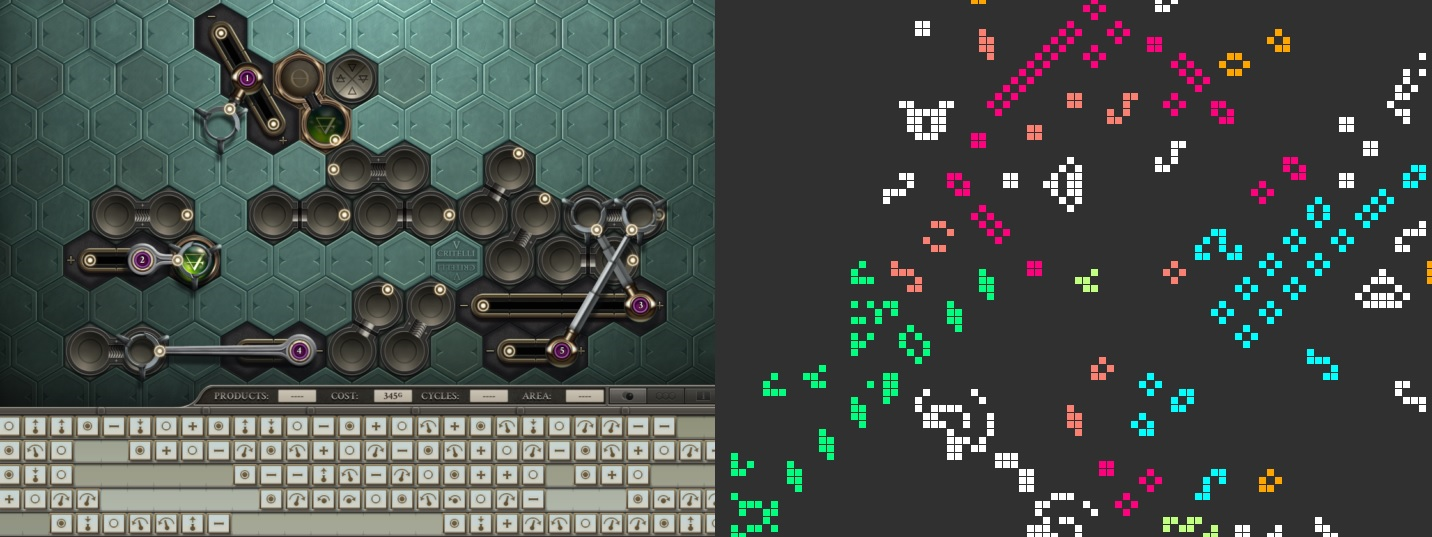

“Fits completely on screen” needs to be more precisely defined. Firstly, the requirement to fit on screen only applies to the machinery and instructions. You can build buffers of atoms that go off-screen during runtime. But that is still very restrictive. My own screen fits this much:

It’s about 15 tiles wide and 8 tiles tall. The programming tray fits 6 arms and 29 instructions each. For my challenge, you needed to use that much and no more.

The reason for constraining the space was because of a few ways to make an arbitrarily slow machine. You can add any fixed number of cycles to the result by having the arms which build outputs, start arbitrarily late. You can add cycles by having machinery in two very distant places which have to build a molecule connecting the two. By starting everything on screen, there was no trickery like that. You had to be slow using an algorithm.

Exponentially slow

The first algorithm I came up with was capable of exponential growth. The stick being pushed out the left, grows by 2 atoms each time the instructions execute. This is the bucket filling with balls from the dispenser. Meanwhile the stick at the top, moves only one atom to the right each time the instructions execute. This is analogous to processing the balls (e.g. hitting them). So when the stick at the top is replaced by the new stick on the left, the replacement will always be around twice as long as before.

The condition to do new behavior, is very simple. The piston in the gif above (golden arm base instead of silver), tries to grab a specific tile. When the stick is moving up and to the right, the grab will miss. When the stick has reached the end, the grab lands.

Each arm in this solution has 8 instructions, and the output (plus a lot of waste) is made once every time the “bucket” is used up. That’s only exponential, I wanted to try to make something slower. I needed to create a condition equivalent to the stack of buckets, and use them up one at a time.

Hitting the limits

With only 29 instructions per arm, I needed to plan carefully how to implement this added complexity. The same instructions would have to handle many tasks. I found a way, in 5 arms with pretty full instruction tapes.

There are a lot of conditions here based on the presence or absence of atoms. See the section about logic gates and ghost atoms in my computer post for more about how those work. Arm 3 is the hardest worker of the bunch. It is responsible for fetching a new stack of buckets, if the old one is used up. It gets that stack precisely from the stick that was otherwise waste of the exponential machine.

See below for two videos of different cases in execution. Both are shown just as the exponential condition is about to act. In the top case, the stack of buckets is used up, so arm 1 makes an output and arm 3 obtains a new stack. In the bottom case, arm 3 has a stack, so it holds an atom that prevents the output. The atom and the output both get passed to the bottom left by arm 4, and no output is made. One bucket used, several more to go.

Unfortunately, the majority of this machine is a loop that looks like this, just working through one atom at a time until the exponential condition finally acts.

Runtime

Opus Magnum is not a well optimized game. I cannot simulate this solution out to cycle 4 ↑↑ 6 to get a results screen or a gif. My computer will churn attempting to move a molecule with tens of thousands of atoms, and that needs to happen trillions of times. But, since I know how the game works, I can figure out what its cycle count should be.

Important cycle numbers

The cycles when arm 1 successfully pushes atoms onto the bonder, are as follows:

39, 184, 793, 3258, 13147, 52732, 211101, 844606, 3378655, 13514880..

These come from an exact expression. There’s some extra factors and complication, but it roughly quadruples every time.

This is the exponential portion, with all the hairy details.

Bucket counts

On cycle 39 there is no waste, no buckets, and no output. It’s just part of how this particular machine initializes. Eventually it all comes together. On cycle 793 there is waste, which becomes a stack of 18 buckets. Two outputs have been made, the next one isn’t made until the bucket stack gets used up.

| Cycle count | waste? | buckets? | output? |

|---|---|---|---|

| 39 | no | 0 | no |

| 184 | no | 0 | yes |

| 793 | yes | 0 | yes |

| 3258 | yes | 18 | no |

| 13147 | yes | 17 | no |

| 52732 | yes | 16 | no |

| 211101 | yes | 15 | no |

And later on..

| Cycle count | waste? | buckets? | output? |

|---|---|---|---|

| 56685932809579 | yes | 1 | no |

| 226743731238924 | yes | 0 | yes |

| 906974924956333 | yes | 7818749352982 | no |

| 3627899699825998 | yes | 7818749352981 | no |

Some 7.8 trillion rows later, the cycle count is 104707356167665 and the 4th output is finally made. And the number of buckets becomes a number of similar magnitude.

How did the 7.8 trillion number come to be? The only thing left to exactly specify the cycle count, would be to have a formula for the next bucket count. And one exists:

In this formula, a is the starting row and b is the number of buckets. The formula outputs the next number of buckets. a = 4 and b = 18 gives 7818749352982. a = 22 and b = 7818749352982 gives a number reasonably close to 104707356167665, which was also the cycle count corresponding to the 4th output. The small multiplicative factor between buckets and cycles gets swallowed up in the power tower.

Bounds

Carrying these expressions out to 6 outputs, we have a repeated leading iteration of x → 4x+1 / 9. As it turns out, the correct base to put in this case, ends up being 44/9, around 1.85. Then what is the height?

Well, 44/9 ↑↑ 4 is around 69 (nice). 44/9 ↑↑ 5 is around 1018. The cycle count of our 3rd output is comfortably between those values. And since leading order is definitely the main contributor from here onwards, that remains the case. The cycle count of our 4th output is between 44/9 ↑↑ 5 and 44/9 ↑↑ 6. Our 5th output, 44/9 ↑↑ 6 and 44/9 ↑↑ 7. The puzzle completes with 6 outputs dropped. The complete cycle count is between 44/9 ↑↑ 7 and 44/9 ↑↑ 8. As a safe lower bound, I can say 44/9 ↑↑ 7 cycles, and that is a very safe lower bound.

More precise work in base 10, can show that the cycle count exceeds 10 ↑↑ 5, but not 10 ↑↑ 6. 10 ↑↑ 5 happens to be a larger lower bound than 44/9 ↑↑ 7, which is nice.

One more arm

Of course, this didn’t squeeze out every last bit of performance. It just was the first design to pull off tetration. I had a little more screen space and one more arm I could add. So I found a truly diabolical task for the final arm. It would make it so that only one in 7 outputs actually made it to the output glyph. This linear filter, changed the cycle estimate from 10 ↑↑ 5 to 10 ↑↑ 41. That’s a nice improvement.

The six outputs to complete the puzzle would drop on cycle counts (very approximately) 10 ↑↑ 6, 10 ↑↑ 13, 10 ↑↑ 20, 10 ↑↑ 27, 10 ↑↑ 34, and 10 ↑↑ 41.

The machine with that behavior is found on my YouTube channel here:

Conway’s Game of Life

I love Opus Magnum, and I love Conway’s Game of Life. I didn’t expect to have them collide in this way.

On March 30th, “squareroot12621” posed the following challenge in the CGoL forums.

- Design a pattern that eventually has population 0 (a diehard).

- The initial bounding box cannot have an area of more than 10000. (This is to rule out arbitrarily large patterns, e.g. a glider crashing into a blinker from far away)

Diehards are fun novelties in the game of life. An example of a natural diehard is shown below, starting as a random patch of on and off cells, and vanishing on generation 658:

First attempts

The first submissions consisted of a blueprint like the following:

During “time wasting”, the two spaceships would cover some ground, the medium one much further than the slow one. Eventually the fast spaceship would start to chase down the medium spaceship, colliding and leaving debris. If the difference in speed was small, then the amount of time and distance between the original box and the debris, would be very large. Finally, the slow spaceship would wander its way slowly to that debris, crash into it, and the population would reach 0.

Don’t worry that you can’t really see the details of individual cells here. The overview here is meant to be conceptual, so this way-zoomed-out scale is fine.

Compare and contrast

This was a nice concept, thinking “outside the box” in a sense. By having something leave the initial confined space, you could take advantage of sizes much larger. Sound familiar?

This challenge actually holds a ton of resemblance to the Opus Magnum inefficiency challenge. Your initial configuration must be small. The design must do something that a random design is unlikely to do (OM: solve the puzzle without crashing, CGoL: die completely). The goal is to use some cleverness to stall as long as possible. The cleverness could and should take advantage of space outside the initial configuration.

An exponential machine

It wasn’t too long before user “Pavgran” was able to find an algorithm with an exponential behavior. Some interaction needed to happen N times before the pattern would die, and the number of generations this would take, looked like somethingN. The key piece of machinery, is called the “sawtooth”.

Much like the fast/medium spaceship chase above, a sawtooth involves ships of nearly-matched speeds. In this case the spaceships are the basic glider, and the memorably-named 58P5H1V1 shown below.

The glider is the faster of the two ships, taking 4 generations to move one tile diagonally where the ship here takes 5. However, instead of destroying on impact, the gliders in a sawtooth let the 58P5H1V1 keep on going.

Gliders are sent in pairs. If they hit the ship, it forms a block behind the still-functional 58P5H1V1. If they hit the block, they pull the block back by one tile. Just one.

Three views of the sawtooth are shown below.

The block pull is excruciatingly slow, and in the time spent pulling, the 58P5H1V1 covers a lot of distance. The expansion factor here is 121. The Nth block is deleted on generation 58 * (121N – 1) + 15.

A diehard

This is slow and all, but it won’t reach population 0 unless something can actually destroy it. Fortunately, a different set of gliders can do the trick. All someone needed to do was create a machine that absorbs the first N signals, and then on the final signal, break everything in the original bounding box and launch a kill squad of gliders towards the runaway spaceship.

On April 7th, Pavgran succeeded. 418 complete block pulls ended in minor changes to the pattern, and the 419th caused utter destruction. Because of the immense distance between the initial bounding box, and the eventual position of the 58P5H1V1, I can’t reasonably show it here. But the final destruction effort is worth a watch:

Many objects exist only to shape the final destruction or bounce gliders into the appropriate lanes. The sum total of everything that shoots down and to the left during this video, exactly destroys the 58P5H1V1. Pavgran had built a diehard with a lifespan around 10870.

Next on our sights was tetration.

Return of the algorithm

In this sawtooth-based diehard, it’s possible to find analogies for each part of the golfer algorithm. The two fixed rates, of balls used and balls dispensed, are the fixed rates that gliders are fired from the main pattern, and the rate the 58P5H1V1 flees. The “get a new bucket” signal is the thing that happens 419 times in Pavgran’s original design. To take it to the next level, we need to build stacks of buckets that take up a lot of space. If we can get several stacks of buckets using the algorithm from the earlier section, then we leave 419 in the dust.

Design

The best way to do this, is to use the other barrel of the 58P5H1V1. Because the 58P5H1V1 is symmetric, it can support two sawtooths (sawteeth?), one on each side.

In the gif above, the upper barrel is being suppressed by a collision. Right above the base of the arrow, the 2 gliders that would be shooting out this barrel, crash into a 3rd glider from below, and the rubble turns into a different glider.

The only way that this barrel actually sends out a glider pair, is if the block pull from the other barrel gets rid of that intercepting glider. That is, the top barrel only fires when it is time to get a new bucket.

Apart from its timing, the top barrel works in the exact same way. If the first thing the gliders hit is the 58P5H1V1, then it forms a block. If the gliders hit the block instead, they pull it back by one tile.

The line of squiggles right above the arrow, get used up one by one when the block gets pulled all the way back. Therefore, this design can “get a new stack of buckets” 6 times. On the 7th, it goes haywire. But as in all other tetration machines, this new stack of buckets is massive. Every time it is an exponential function of the previous number of buckets.

The diehard

Cells here are color-coded according to their function. White cells are the core engine, which includes the double barreled gun design and the 58P5H1V1. Blue cells help delay the firing of either barrel, at the start. Light green cells stop a block pull without deleting the intercepting glider, which delays the firing of the top barrel at the start. Green “gets a new stack of buckets,” contributing to the height of the tetration tower. Salmon color builds the firing squad that brings down the 58P5H1V1. Orange ensures that the rest of the pattern dies cleanly.

The bounding rectangle is 104 by 96, which is 9984, barely under the limit of 10000. Already there is a bit of “space dust” in the bottom center in white, that is needed to build an object slightly outside the bounding box. By generation 65, it turns into the bottommost “eater” still life from the previous gif. This optimization was necessary to get the design to fit at all.

Runtime

Pavgran did a lengthy analysis of the runtime of this diehard (edit: actually a slightly improved diehard with one extra blocking object – see link for discussion) in the CGoL forums. I’ll link to the full detail, but the answer is a system of equations.

u0 = 7

u1 = u0 + 119287080322267 * 121u0 – 512

u2 = u1 + 119287080322267 * 121u1 – 508

u3 = u2 + 119287080322267 * 121u2 – 504

u4 = u3 + 119287080322267 * 121u3 – 500

u5 = u4 + 119287080322267 * 121u4 – 496

u6 = u5 + 119287080322267 * 121u5 – 492

u7 = u6 + 119287080322267 * 121u6 – 488

v = u7 + 119287080322267 * 121u7 – 484

T = 4 * 119287080322267 * 121v + 2054

Where the repeated leading iteration of the Opus Magnum machine was x → 4x+1 / 9, the repeated leading iteration of this diehard is x → 119287080322267 * 121x. That fantastic 119 trillion constant comes out of the compounding effects of having delayed the first launch from the top barrel by so long. As large as it is, it has almost no effect compared to the height of the tetration tower itself.

To compare it to actual base 10 tetration towers, this value seems to be between 10 ↑↑ 11 and 10 ↑↑ 12.

Improvement

There wasn’t such an obvious room for improvement here as “we can still add one more arm.” But there was still some squeezing to be done. Users found more ways to start with space dust, buying rows for additional “new stack of buckets” reset objects. At the time of writing, the record fits in a 111 by 90 rectangle, a tight 9990 cells in area. With hot pink encoding another layer of trifling early delay, the main improvement is just to the green cells determining the height of the power tower. The mess here eventually becomes 11 separate “new stack of buckets” objects.

The runtime of this pattern is between 10 ↑↑ 14 and 10 ↑↑ 15 generations, and then it dies completely.

What next?

I thought it was fascinating to see the same ideas play out in two different universes. Both of the problems being solved were purely recreational math, but they are the kind of thing that I enjoy a lot. Tetration algorithms start to break people’s brains, with questions like “How many digits in the number? Oh that’s too big too? Uh, how many digits in the number of digits? Yikes!” But despite that, tetration still produces values that are easily overpowered by other algorithms.

Bigger and slower?

I would be interested in building an Ackermann function computer in Opus Magnum. It’s already been done by Shufflepants, but that took up quite a lot of space. Nevertheless, I think I have a better shot flexing my Opus Magnum chops to optimize this down to my screen, than I do of making any progress in Conway’s Game of Life.

The Ackermann function takes much better advantage of the fast growing hierarchy, and basically exhausts the power of Knuth’s up arrows. There are even faster growing functions that one could implement algorithmically, such as the one to define Graham’s number (barely bigger than Ackermann), and the ones involved in Goodstein sequences (far far bigger than Ackermann). But these are too complex for me to imagine stuffing into a reduced space like this. Other functions that don’t have an underlying algorithm, such as TREE or busy beaver, don’t count here at all. But because these challenges are fundamentally very busy-beaver-esque (the longest running thing in a small design space that must meet a certain criterion), there’s bound to be something I wouldn’t ever dream of. Please, any reader who cares about either of these universes, try it out. Make something cool. I would love to see it!

What next, but more meta

As for the blog itself, I keep finding more that I want to write about. This was a brief diversion from the tournament-focused posts. I will be back soon with a post about the computation puzzle from the 2022 Opus Magnum tournament, but there are still a handful more ideas I want to write about after that.